Cascading failures represent a significant threat to the stability and reliability of any large-scale online service. These failures, characterized by their escalating nature, can rapidly degrade performance and lead to widespread outages. In the context of Google’s infrastructure, even seemingly “small” servers play a crucial role, and understanding their traffic handling capacity is paramount to preventing such events. This article delves into the intricacies of cascading failures, their causes, and the strategies employed to mitigate them, drawing insights from Google’s Site Reliability Engineering (SRE) practices.

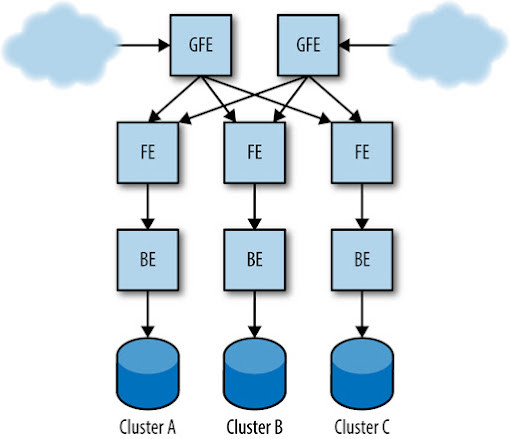

Example production configuration for the Shakespeare search service.

Understanding the Roots of Cascading Failures and Preventative Design

A cascading failure is essentially a domino effect within a system. It begins with a localized failure, which, through positive feedback loops, amplifies and propagates across the system, potentially leading to a complete service disruption. Imagine a scenario where a single server replica becomes overloaded. This overload can trigger a chain reaction: the burdened replica falters, increasing the load on the remaining replicas. If these remaining replicas are near their capacity, the additional stress can push them over the edge, causing them to fail as well. This domino effect can rapidly cascade, taking down the entire service.

To illustrate, let’s consider a simplified example, the Shakespeare search service. In a typical setup, this service might be distributed across multiple clusters to handle user requests efficiently and ensure redundancy.

Server Overload: The Primary Culprit

Server overload stands out as the most frequent initiator of cascading failures. Many cascading scenarios, in fact, directly stem from server overload or variations of this core problem.

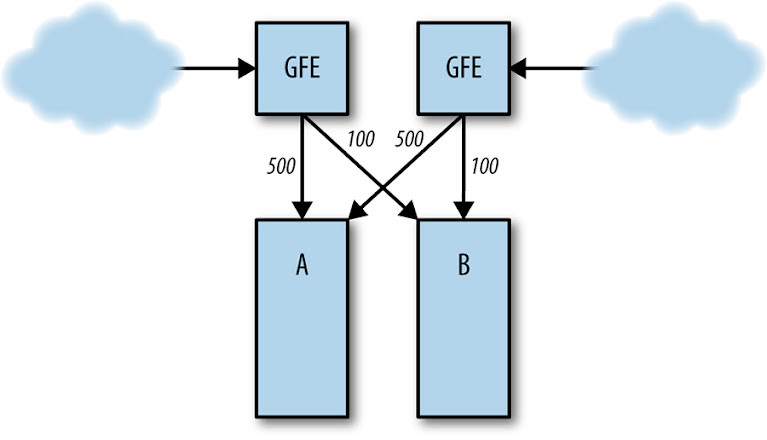

Consider a situation where the frontend in cluster A is handling a steady 1,000 queries per second (QPS), its normal operating load.

Normal server load distribution between clusters A and B.

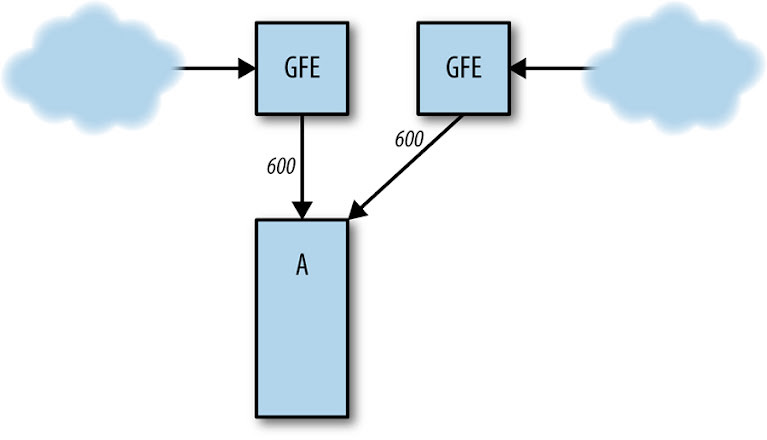

Now, imagine cluster B experiences a failure. Traffic intended for cluster B is now rerouted to cluster A. Suddenly, cluster A is bombarded with 1,200 QPS. This surge in requests exceeds cluster A’s designed capacity. The frontends in cluster A, unable to cope with the increased load, begin to struggle. They may exhaust resources, crash, miss critical deadlines, or exhibit other erratic behaviors. Consequently, the rate of successfully processed requests in cluster A plummets below the original 1,000 QPS baseline.

Cluster B fails, sending all traffic to cluster A.

This localized overload in cluster A can quickly spread to other parts of the system, potentially leading to a global service outage. For instance, as servers in cluster A crash due to overload, the load balancer, in an attempt to maintain service availability, might redirect requests to other clusters. However, if these clusters are also operating near their capacity, they too can become overloaded, triggering a service-wide cascading failure. The speed at which this escalation occurs can be alarmingly rapid, sometimes within minutes, as load balancers and task scheduling systems react swiftly to perceived server failures.

Resource Exhaustion: Fueling the Cascade

Running out of critical resources is another major factor that can trigger or exacerbate cascading failures. Resource exhaustion can manifest in various ways, such as increased latency, elevated error rates, or a reduction in the quality of service. These are often deliberate mechanisms designed to signal that a server is approaching its limits. When a server exhausts a resource, its efficiency diminishes, and in severe cases, it can crash. This crash then prompts the load balancer to redistribute the load, potentially spreading the resource exhaustion problem to other servers and initiating a cascade.

Different types of resource exhaustion have distinct impacts on server behavior:

CPU Starvation

Insufficient CPU processing power directly translates to slower request handling across the board. This CPU bottleneck can lead to a cascade of secondary issues:

- Increased In-Flight Requests: Slower processing times mean requests remain active for longer, leading to a higher number of concurrent requests. This increased concurrency strains almost all server resources, including memory, threads, file descriptors, and backend dependencies.

- Excessive Queue Lengths: If the incoming request rate consistently surpasses the server’s processing capacity, request queues will saturate. This results in increased latency as requests spend more time waiting in the queue and also consumes additional memory for queue management.

- Thread Starvation: In scenarios where threads are waiting for locks or other resources, health checks might fail if the health check endpoint cannot be served within the expected timeframe, leading to false positives and potential server restarts.

- CPU or Request Starvation (Watchdogs): Internal monitoring systems (“watchdogs”) within the server, or external watchdog systems, can detect a lack of progress. This can lead to server crashes due to perceived CPU starvation or request starvation if watchdog events are triggered remotely and processed as part of the already overloaded request queue.

- Missed RPC Deadlines: As servers become overloaded, responses to Remote Procedure Calls (RPCs) from clients are delayed. These delays can exceed predefined deadlines set by clients. The server’s processing effort becomes wasted as clients may abandon the call and retry, further amplifying the overload.

- Reduced CPU Caching Efficiency: Increased CPU utilization often leads to context switching across more CPU cores. This reduces the effectiveness of CPU caches, as data is less likely to be readily available in the cache, leading to decreased CPU efficiency.

Memory Depletion

Memory exhaustion, even in its simplest form of increased in-flight requests consuming RAM for request/response objects, can have severe consequences:

- Task Termination: The container manager (virtual machine or container runtime) might forcibly terminate tasks exceeding memory limits. Application-level crashes due to out-of-memory errors can also lead to task failures.

- Garbage Collection (GC) Spirals (Java): In Java environments, memory pressure triggers more frequent and intense garbage collection cycles. This creates a vicious cycle: increased GC consumes more CPU, further slowing down request processing, leading to increased RAM usage, prompting even more GC activity, and ultimately reducing available CPU even further – the “GC death spiral.”

- Cache Hit Rate Degradation: Reduced available RAM directly impacts application-level caches. Smaller caches and lower hit rates mean more requests need to be served from slower backends, potentially overloading those backends.

Thread Starvation

Thread starvation can directly cause errors or trigger health check failures. If a server dynamically creates threads to handle increasing load, the overhead of managing these threads can consume excessive RAM. In extreme situations, thread exhaustion can even lead to process ID (PID) exhaustion.

File Descriptor Limits

Running out of file descriptors can prevent a server from establishing new network connections. This inability to connect can cause health checks to fail, leading to the server being marked as unhealthy and potentially removed from the load balancing pool.

Interdependencies of Resource Exhaustion

It’s crucial to recognize that these resource exhaustion scenarios are often interconnected and can feed into each other. An overloaded service typically exhibits a complex web of symptoms. What appears to be the root cause might actually be a secondary effect in a chain of events, making diagnosis challenging.

Consider this complex but realistic scenario:

- A Java-based frontend service has suboptimal garbage collection (GC) settings.

- Under normal, but high, traffic load, the frontend experiences CPU exhaustion due to excessive GC activity.

- CPU exhaustion slows down request completion times.

- The increased duration of requests leads to a larger number of concurrent in-progress requests, increasing RAM usage for request processing.

- Memory pressure from requests, combined with a fixed memory allocation for the frontend process, reduces the RAM available for caching.

- The smaller cache size leads to fewer cached entries and a lower cache hit rate.

- Increased cache misses force more requests to be forwarded to the backend service.

- The backend service, now facing increased load, becomes CPU or thread-constrained.

- Finally, the backend’s CPU exhaustion triggers basic health check failures, initiating a cascading failure.

In such intricate scenarios, pinpointing the exact causal chain during an active outage is often difficult. It might be extremely challenging to determine that the backend crash was ultimately triggered by a reduced cache hit rate in the frontend, especially if the frontend and backend are managed by different teams or operate independently.

Service Unavailability: The Downward Spiral

Resource exhaustion can ultimately lead to server crashes. For example, excessive RAM consumption by a container can cause the container to be terminated. Once even a few servers crash due to overload, the remaining servers bear an even heavier load, accelerating their path to failure. This creates a snowball effect, quickly leading to a state where all servers are caught in a crash loop. Escaping this state is often difficult because newly started servers are immediately overwhelmed by the backlog of requests and fail almost instantly.

Imagine a service that operates healthily at 10,000 QPS but enters a cascading failure at 11,000 QPS due to server crashes. Simply reducing the load back to 9,000 QPS is unlikely to resolve the crashes. This is because the service is now trying to handle increased demand with significantly reduced capacity. Only a small fraction of servers might be healthy enough to process requests. The proportion of healthy servers depends on factors like the speed of task startup, the time it takes for a binary to reach full serving capacity, and how long a newly started task can withstand the intense load. In this example, if only 10% of servers are healthy, the request rate might need to drop to around 1,000 QPS for the system to stabilize and begin recovery.

Similarly, servers can become “unhealthy” from the perspective of the load balancing system without actually crashing. They might enter a “lame duck” state (gracefully refusing new requests while finishing existing ones) or simply fail health checks. The effect is similar to server crashes: fewer servers are available to handle requests, and the healthy servers are quickly overwhelmed before they too become unhealthy.

Load balancing policies that actively avoid servers that have recently served errors can further worsen the situation. If a few backends start serving errors, they are removed from the active pool, reducing the overall capacity. This concentrates the load on the remaining servers, accelerating the cascading failure.

Strategies for Preventing Server Overload

Preventing server overload is crucial for maintaining system stability. Here are key strategies, presented in order of priority:

- Rigorous Load Testing and Failure Mode Analysis: This is the most critical step. You must thoroughly test your server’s capacity limits and understand its failure behavior under overload conditions. Without realistic testing, it’s nearly impossible to accurately predict which resource will be exhausted first and how that exhaustion will manifest. Load testing should simulate real-world traffic patterns and gradually increase load to identify breaking points. It should also test the system’s recovery behavior after overload.

- Serving Degraded Results: Implement mechanisms to gracefully degrade service quality under load. Instead of crashing or failing entirely, the server should be designed to serve lower-quality, less resource-intensive results to users during peak load. This strategy is highly service-specific. For example, a search service could return results from a faster, less comprehensive index or use a simplified ranking algorithm.

- Server-Side Request Rejection (Load Shedding): Equip servers to proactively protect themselves from overload. When a server, at either the frontend or backend layer, detects overload conditions, it should reject new requests early and efficiently. This prevents resource exhaustion and potential crashes. Implement mechanisms to monitor key metrics like CPU usage, memory consumption, and queue lengths to trigger load shedding.

- Higher-Level Request Rejection: Implement request rejection mechanisms at higher levels in the system, such as reverse proxies and load balancers. This prevents requests from even reaching overloaded servers. Rate limiting can be applied at various levels:

  - Reverse Proxies: Limit request volume based on criteria like IP address to mitigate denial-of-service attacks or abusive clients.

  - Load Balancers: Drop requests when the overall service is globally overloaded. This rate limiting can be indiscriminate (“drop all traffic above X QPS”) or more selective (“prioritize requests from interactive user sessions and drop background synchronization requests”).

  - Individual Tasks: Limit request processing at the task level to prevent load fluctuations from overwhelming individual servers.

- Capacity Planning: Proactive capacity planning is essential to minimize the likelihood of overload. Capacity planning should be coupled with performance testing to accurately determine the load thresholds at which the service starts to degrade or fail. For example, if each cluster’s capacity limit is 5,000 QPS, and the service’s peak load is 19,000 QPS, then approximately six clusters (N+2 redundancy) are needed. While capacity planning reduces the risk, it’s not a foolproof defense against all cascading failures. Unexpected events like infrastructure failures, network partitions, or sudden traffic surges can still create overload situations. Auto-scaling systems that dynamically adjust server capacity based on demand can help, but proper capacity planning remains a fundamental requirement.
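The N+2 figure in the capacity planning example can be reproduced with a few lines of arithmetic. This is only a sketch: the function name `clustersNeeded` is made up for illustration, and it assumes every cluster has the same capacity.

```go
package main

import "fmt"

// clustersNeeded returns how many clusters to provision so that peakQPS can
// still be served after losing `spare` clusters (N+spare redundancy).
// Hypothetical helper; assumes all clusters have identical capacity.
func clustersNeeded(peakQPS, perClusterQPS, spare int) int {
	n := (peakQPS + perClusterQPS - 1) / perClusterQPS // ceiling division
	return n + spare
}

func main() {
	// Figures from the text: 19,000 QPS peak, 5,000 QPS per cluster, N+2.
	fmt.Println(clustersNeeded(19000, 5000, 2)) // prints 6
}
```

Four clusters cover the peak (19,000 / 5,000, rounded up), and the two spares bring the total to six.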

Queue Management: Balancing Responsiveness and Stability

Most thread-per-request servers utilize a queue in front of a thread pool to manage incoming requests. Requests are placed in the queue, and worker threads from the thread pool pick up requests for processing. When the queue is full, the server should ideally reject new requests to prevent further overload.

In an ideal scenario with constant request rates and processing times, queuing should be minimal. A fixed number of threads should be sufficient to handle the steady-state load. Queuing becomes necessary only when the incoming request rate exceeds the server’s sustained processing capacity, leading to saturation of both the thread pool and the queue.

However, queues themselves consume memory and introduce latency. If the queue size is excessively large (e.g., 10 times the thread pool size), a request might spend a significant portion of its total processing time waiting in the queue. For systems with relatively stable traffic patterns, smaller queue lengths (e.g., 50% or less of thread pool size) are generally preferable. This allows the server to reject requests early when it cannot sustain the incoming rate, promoting stability. Conversely, systems with highly “bursty” traffic might benefit from larger queues, sized based on thread pool size, request processing times, and burst characteristics. Gmail, for example, often employs “queueless” servers, relying on failover to other server tasks when threads are fully occupied.
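As a rough illustration of a small bounded queue in front of a fixed worker pool, the sketch below rejects work as soon as the queue is full instead of letting it wait. The `Server` type and its methods are hypothetical names chosen for this example, with goroutines standing in for worker threads.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrQueueFull is returned when the bounded queue cannot accept more work.
var ErrQueueFull = errors.New("queue full: request rejected")

// Server is a hypothetical thread-per-request server: a fixed pool of worker
// goroutines drains a bounded queue of pending requests.
type Server struct {
	queue chan func()
}

// NewServer starts `workers` goroutines behind a queue of length queueLen.
func NewServer(workers, queueLen int) *Server {
	s := &Server{queue: make(chan func(), queueLen)}
	for i := 0; i < workers; i++ {
		go func() {
			for job := range s.queue {
				job()
			}
		}()
	}
	return s
}

// Submit enqueues a request without blocking and rejects it if the queue is full.
func (s *Server) Submit(job func()) error {
	select {
	case s.queue <- job:
		return nil
	default:
		return ErrQueueFull
	}
}

func main() {
	// Four workers with a queue half the pool size, as suggested for steady traffic.
	s := NewServer(4, 2)
	release := make(chan struct{})
	accepted, rejected := 0, 0
	for i := 0; i < 20; i++ {
		if err := s.Submit(func() { <-release }); err != nil {
			rejected++
		} else {
			accepted++
		}
	}
	close(release) // let the blocked workers finish
	fmt.Printf("accepted=%d rejected=%d\n", accepted, rejected)
}
```

The exact counts depend on how quickly the workers pick up jobs, but most of the burst is rejected immediately rather than queued behind work that cannot finish.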

Load Shedding and Graceful Degradation: Controlled Overload Management

Load shedding is the practice of intentionally dropping a portion of incoming traffic as a server approaches overload. The goal is to prevent the server from succumbing to resource exhaustion, health check failures, or excessive latency while still maximizing the amount of useful work it can perform.

A straightforward load shedding technique is per-task throttling based on metrics like CPU usage, memory consumption, or queue length. Limiting queue length, as discussed earlier, is a form of load shedding. A common and effective approach is to return an HTTP 503 (Service Unavailable) error to new requests when the number of in-flight requests exceeds a predefined threshold.
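A minimal sketch of the in-flight threshold approach, written as standard `net/http` middleware. The names `limitInFlight`, `statusFor`, and `demo` are illustrative, and the limit of one request is chosen only to keep the demonstration deterministic.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// limitInFlight wraps next and answers 503 once max requests are in progress.
func limitInFlight(max int, next http.Handler) http.Handler {
	slots := make(chan struct{}, max) // one token per in-flight request
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		select {
		case slots <- struct{}{}:
			defer func() { <-slots }()
			next.ServeHTTP(w, r)
		default:
			http.Error(w, "server overloaded", http.StatusServiceUnavailable)
		}
	})
}

// statusFor sends one in-memory GET request to h and returns the status code.
func statusFor(h http.Handler) int {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest("GET", "/", nil))
	return rec.Code
}

// demo issues a second request while the first is still being handled.
func demo() (first, second int) {
	var limited http.Handler
	limited = limitInFlight(1, http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		second = statusFor(limited) // arrives while the first request holds the only slot
		w.WriteHeader(http.StatusOK)
	}))
	first = statusFor(limited)
	return first, second
}

func main() {
	first, second := demo()
	fmt.Println(first, second) // prints 200 503
}
```

Rejecting in the `default` branch costs almost nothing, which is the point: a shed request should be much cheaper than a served one.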

More advanced load shedding strategies involve modifying queue management algorithms. Switching from the standard First-In, First-Out (FIFO) queue to Last-In, First-Out (LIFO) or using algorithms like Controlled Delay (CoDel) can prioritize newer requests and discard older, potentially stale requests that are unlikely to be useful. For example, if a web search request is significantly delayed due to queueing, the user might have already refreshed the page and sent a new request. Processing the original, now outdated, request is wasteful. This strategy is particularly effective when combined with RPC deadline propagation.

More sophisticated load shedding approaches might selectively drop requests based on client identity or prioritize requests deemed more important. Such strategies are often necessary for shared services serving diverse client types with varying priorities.

Graceful degradation takes load shedding a step further by actively reducing the amount of processing work required. In some applications, it’s possible to significantly reduce resource consumption by sacrificing response quality. For instance, a search application could search only a subset of its data (e.g., an in-memory cache) or employ a less accurate but faster ranking algorithm during overload conditions.

When considering load shedding and graceful degradation for your service, carefully evaluate:

- Trigger Metrics: Which metrics will indicate when load shedding or graceful degradation should activate (e.g., CPU usage, latency, queue length, thread count)? Should the system enter degraded mode automatically, or is manual intervention required?

- Degraded Mode Actions: What specific actions will be taken when the server enters degraded mode (e.g., serving reduced quality results, dropping specific types of requests)?

- Implementation Layer: At which layer(s) in the system stack should load shedding and graceful degradation be implemented? Is it necessary at every layer, or is a high-level “choke point” sufficient?

During evaluation and deployment, remember these key considerations:

- Infrequent Triggering: Graceful degradation should be an exception, triggered only in cases of capacity planning failures or unexpected load shifts. Keep the implementation simple and easily understandable, especially if it’s rarely used.

- Code Path Exercise: Code paths for graceful degradation that are rarely executed are prone to bugs. Regularly exercise the graceful degradation logic by intentionally running a small subset of servers near overload to ensure its continued functionality.

- Monitoring and Alerting: Implement monitoring and alerting to detect when servers enter degraded modes too frequently, indicating potential underlying issues.

- Complexity Management: Complex load shedding and graceful degradation mechanisms can introduce their own problems, potentially causing unintended degraded modes or feedback loops. Design mechanisms for quickly disabling complex degradation or tuning parameters if needed. Storing configuration in a consistent, watchable system like Chubby can speed up deployments but introduces risks of synchronized failures.

Retries: A Double-Edged Sword

Naive retry implementations can significantly exacerbate cascading failures. Consider a frontend service that naively retries backend RPC calls upon failure, with a fixed retry limit.

    func exampleRpcCall(client pb.ExampleClient, request pb.Request) *pb.Response {
        // Set RPC timeout to 5 seconds.
        opts := []grpc.DialOption{grpc.WithTimeout(5 * time.Second)}

        // Try up to 10 times to make the RPC call.
        attempts := 10
        for attempts > 0 {
            conn, err := grpc.Dial(*serverAddr, opts...)
            if err != nil {
                // Something went wrong in setting up the connection. Try again.
                attempts--
                continue
            }
            defer conn.Close()

            // Create a client stub and make the RPC call.
            client := pb.NewBackendClient(conn)
            response, err := client.MakeRequest(context.Background(), request)
            if err != nil {
                // Something went wrong in making the call. Try again.
                attempts--
                continue
            }

            return response
        }

        grpclog.Fatalf("ran out of attempts")
        return nil // Not reached; Fatalf exits, but the compiler requires a return.
    }

This seemingly innocuous retry logic can contribute to a cascading failure in this manner:

- Assume a backend service has a capacity limit of 10,000 QPS. Beyond this, it starts rejecting requests as part of its graceful degradation strategy.

- The frontend service starts sending MakeRequest calls at a constant rate of 10,100 QPS, slightly overloading the backend by 100 QPS. The backend rejects these 100 excess requests.

- The frontend’s retry mechanism kicks in. These 100 failed requests are retried every 1000ms (or some other retry interval). Many of these retries succeed. However, the retries themselves add to the overall request volume reaching the backend. The backend now receives 10,200 QPS, which is 200 QPS above its capacity, leading to even more rejections.

- The volume of retries keeps growing. The initial 100 QPS of retries in the first second leads to 200 QPS in the next, then 300 QPS, and so on. A decreasing proportion of initial requests succeed on their first attempt, so less and less useful work is performed relative to the total requests sent to the backend.

- If the backend service cannot handle this escalating load, consuming file descriptors, memory, and CPU time processing both successful and failing requests, it can become overwhelmed and crash. This backend crash then redistributes its load across the remaining backend instances, further overloading them and accelerating the cascade.
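The growth described in the steps above can be reproduced with a toy model. The sketch assumes one-second time steps, that the backend rejects exactly the excess over its capacity, and that every rejected request is retried once in the following second. It ignores the per-request attempt limit, and `offeredLoad` is a hypothetical name.

```go
package main

import "fmt"

// offeredLoad returns the load reaching the backend in each one-second step,
// assuming every request rejected in one step is retried in the next step.
func offeredLoad(baseQPS, capacityQPS, seconds int) []int {
	offered := make([]int, 0, seconds)
	retries := 0
	for t := 0; t < seconds; t++ {
		load := baseQPS + retries // fresh requests plus last second's retries
		offered = append(offered, load)
		retries = 0
		if load > capacityQPS {
			retries = load - capacityQPS // everything above capacity is rejected
		}
	}
	return offered
}

func main() {
	// Figures from the text: 10,100 QPS of new requests against 10,000 QPS of capacity.
	fmt.Println(offeredLoad(10100, 10000, 4)) // prints [10100 10200 10300 10400]
}
```

Even in this simplified model, a 1% overload never settles: each step adds another 100 QPS of retries on top of the previous ones.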

While this is a simplified illustration, it highlights the danger of uncontrolled retries. Both sudden load spikes and gradual traffic increases can trigger this retry-induced destabilization.

Even if the initial call rate to MakeRequest decreases back to pre-meltdown levels (e.g., 9,000 QPS), the problem might persist. This is because:

- If the backend expends significant resources processing requests that are destined to fail due to overload, the retries themselves might be sustaining the backend in an overloaded state.

- The backend servers themselves might become unstable due to resource exhaustion caused by processing failed requests and retries.

In such scenarios, simply reducing the initial request rate might not be enough. To recover, you must drastically reduce or completely eliminate the load on the frontends until the retries subside and the backends stabilize.

This pattern of retry-induced cascading failures has been observed in various contexts, including frontend-backend RPC communication, client-side JavaScript retries to endpoints, and aggressive offline synchronization protocols with retries.

To mitigate the risks of retries, consider these guidelines:

- Implement Backend Protection Strategies: Employ the server overload prevention techniques discussed earlier, such as load testing and graceful degradation.

- Randomized Exponential Backoff: Always use randomized exponential backoff for retry intervals. This prevents retry storms where retries from multiple clients synchronize and amplify each other, as described in the AWS Architecture Blog post “Exponential Backoff and Jitter”.

- Limit Retries Per Request: Do not retry a given request indefinitely. Set a maximum number of retries.

- Server-Wide Retry Budget: Implement a server-wide retry budget. For example, limit the total number of retries per minute within a process. If the budget is exceeded, fail requests instead of retrying. This can contain retry amplification and prevent a localized capacity issue from escalating into a global outage.

- Holistic Service View: Evaluate the necessity of retries at each level of the system. Avoid retrying at multiple layers, because the attempts multiply. If each of the three layers above the database (backend, frontend, client) makes up to four attempts (one initial call plus three retries), a single user action can generate 64 attempts (4^3) on the database, which is highly undesirable when the database is already overloaded and returning errors.

- Clear Response Codes: Use clear and specific response codes to differentiate between retriable and non-retriable errors. Do not retry permanent errors or malformed requests, as they will never succeed. Return specific status codes indicating overload to signal clients and other layers to back off and avoid retries.
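Several of these guidelines can be combined in one small helper. The sketch below is one possible shape, not a reference implementation: `retryBudget`, `backoff`, `callWithRetry`, and `errPermanent` are hypothetical names, the base and maximum delays are arbitrary, and refilling the budget once per window is left out for brevity.

```go
package main

import (
	"errors"
	"fmt"
	"math/rand"
	"sync"
	"time"
)

var (
	errOverloaded = errors.New("backend overloaded") // retriable
	errPermanent  = errors.New("permanent error")    // never retried
)

// retryBudget caps how many retries the whole process may issue.
type retryBudget struct {
	mu        sync.Mutex
	remaining int
}

func (b *retryBudget) take() bool {
	b.mu.Lock()
	defer b.mu.Unlock()
	if b.remaining <= 0 {
		return false
	}
	b.remaining--
	return true
}

// backoff picks a random delay in [0, min(maxDelay, base*2^attempt)).
func backoff(attempt int, base, maxDelay time.Duration) time.Duration {
	d := base
	for i := 0; i < attempt && d < maxDelay; i++ {
		d *= 2
	}
	if d > maxDelay {
		d = maxDelay
	}
	return time.Duration(rand.Int63n(int64(d)))
}

// callWithRetry makes at most maxAttempts calls and reports how many it made.
func callWithRetry(call func() error, maxAttempts int, budget *retryBudget,
	sleep func(time.Duration)) (int, error) {
	var err error
	for attempt := 0; attempt < maxAttempts; attempt++ {
		if attempt > 0 {
			if !budget.take() {
				return attempt, fmt.Errorf("retry budget exhausted: %w", err)
			}
			sleep(backoff(attempt-1, 100*time.Millisecond, 5*time.Second))
		}
		err = call()
		if err == nil || errors.Is(err, errPermanent) {
			return attempt + 1, err
		}
	}
	return maxAttempts, err
}

func alwaysFail() error     { return errOverloaded }
func noSleep(time.Duration) {} // lets callers skip real waiting, e.g. in tests

func main() {
	budget := &retryBudget{remaining: 2}
	attempts, err := callWithRetry(alwaysFail, 5, budget, time.Sleep)
	fmt.Println(attempts, err) // 3 attempts, then the budget stops further retries
}
```

Passing the sleep function as a parameter is a design convenience: production code can pass `time.Sleep`, while tests can pass a no-op.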

During an active outage, identifying retry storms as the root cause can be challenging. Graphs of retry rates can be indicative, but they might be misinterpreted as symptoms rather than causes. Mitigation typically involves code changes to fix retry behavior or drastic load reduction.

Latency and Deadlines: Managing Request Lifecycles

When a frontend service sends an RPC to a backend, the frontend consumes resources while waiting for a response. RPC deadlines define how long the frontend will wait before giving up, which limits how long a slow backend can tie up the frontend’s resources.

Deadline Selection

Setting deadlines is crucial. No deadline or excessively long deadlines can allow transient issues to consume server resources long after the originating problem has resolved.

Long deadlines can lead to resource exhaustion at higher levels of the stack when lower levels are experiencing problems. Conversely, overly short deadlines can cause legitimate, but time-consuming requests to fail consistently. Choosing an appropriate deadline involves balancing these competing constraints.

Missed Deadlines: Wasted Effort

A common pattern in cascading outages is servers continuing to process requests that have already exceeded their client-side deadlines. This results in wasted resources as the server expends effort on requests for which it will receive no “credit” – the client has already given up and moved on.

If an RPC has a 10-second deadline set by the client, and a server is overloaded, causing it to take 11 seconds just to move a request from the queue to a worker thread, the client has already timed out. In such cases, the server should ideally abandon processing the request immediately, as any further work is futile.

If request processing involves multiple stages (e.g., parsing, backend RPC calls, processing), the server should check the remaining deadline before proceeding with each stage. This proactive deadline checking prevents wasted computation on doomed requests.
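One way to express the per-stage check in Go is to consult the request context before each stage. The sketch below uses the standard `context` package. The `stage` type, `runStages`, and the three stage names are invented for this example, and the 20 ms deadline is artificially short.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// stage is one step of request processing, such as parsing or a backend call.
type stage func(ctx context.Context) error

// runStages runs the stages in order but re-checks the deadline before each
// one, so no new work starts for a request the client has already abandoned.
func runStages(ctx context.Context, stages []stage) (int, error) {
	completed := 0
	for _, s := range stages {
		if err := ctx.Err(); err != nil {
			return completed, err // deadline exceeded or request cancelled
		}
		if err := s(ctx); err != nil {
			return completed, err
		}
		completed++
	}
	return completed, nil
}

// demo gives a request a 20 ms deadline and a slow second stage.
func demo() (int, error) {
	ctx, cancel := context.WithTimeout(context.Background(), 20*time.Millisecond)
	defer cancel()
	parse := func(context.Context) error { return nil }
	slowBackendCall := func(context.Context) error {
		time.Sleep(60 * time.Millisecond) // overruns the deadline
		return nil
	}
	render := func(context.Context) error { return nil } // never runs
	return runStages(ctx, []stage{parse, slowBackendCall, render})
}

func main() {
	completed, err := demo()
	fmt.Println(completed, err) // prints 2 context deadline exceeded
}
```

The third stage is skipped because the check runs before it starts. A fuller version would also have long stages watch `ctx.Done()` while they work.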

Deadline Propagation: End-to-End Context

Instead of setting arbitrary deadlines for backend RPCs, servers should implement deadline propagation.

With deadline propagation, the initial request at the highest level of the stack (e.g., the frontend) is assigned a deadline. All subsequent RPCs initiated as part of processing this request inherit the same absolute deadline, adjusted for the elapsed processing time. For example, if server A sets a 30-second deadline and spends 7 seconds processing before making an RPC call to server B, the RPC to server B will have a remaining deadline of 23 seconds. If server B takes 4 seconds and then calls server C, the RPC to C will have a 19-second deadline, and so on. Ideally, every server in the request path implements deadline propagation.
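In Go, passing the same `context.Context` down the call chain propagates the absolute deadline without extra bookkeeping. The sketch below replays the 30-second example with computed timestamps instead of real waiting. The helper names `remaining` and `budgets` are assumptions made for illustration.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// remaining reports how much of the propagated deadline is left at time now.
// The boolean is false if the context carries no deadline.
func remaining(ctx context.Context, now time.Time) (time.Duration, bool) {
	deadline, ok := ctx.Deadline()
	if !ok {
		return 0, false
	}
	return deadline.Sub(now), true
}

// budgets replays the example: A allows 30 s in total, spends 7 s before
// calling B, and B spends 4 s before calling C.
func budgets() (atB, atC time.Duration) {
	start := time.Now()
	// Server A assigns the request an absolute deadline 30 seconds out.
	ctx, cancel := context.WithDeadline(context.Background(), start.Add(30*time.Second))
	defer cancel()

	// Passing ctx unchanged on each outgoing RPC carries the same deadline.
	arriveB := start.Add(7 * time.Second)
	arriveC := arriveB.Add(4 * time.Second)
	atB, _ = remaining(ctx, arriveB)
	atC, _ = remaining(ctx, arriveC)
	return atB, atC
}

func main() {
	atB, atC := budgets()
	fmt.Println(atB, atC) // prints 23s 19s
}
```

Because the deadline is absolute, no server needs to know how much time its callers have already spent. Each one only compares the deadline with its own clock.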

Without deadline propagation, issues can arise. Consider:

- Server A sends an RPC to server B with a 10-second deadline.

- Server B takes 8 seconds to start processing and then makes an RPC call to server C.

- Server B does not use deadline propagation; instead, it sets a fixed 20-second deadline for its RPC to server C.

- Server C starts processing the request after a 5-second queue delay.

If server B had used deadline propagation, the RPC to server C would have carried a deadline of only 2 seconds (10s - 8s). After its 5-second queue delay, server C would have seen that the deadline had already passed and rejected the request without doing any work. Without propagation, however, server C incorrectly believes it has 15 seconds (20s - 5s) remaining and wastes resources processing a doomed request.

You might want to slightly reduce outgoing deadlines (e.g., by a few hundred milliseconds) to account for network transit time and client-side post-processing.

Consider also setting upper bounds on outgoing deadlines, especially for RPCs to non-critical backends or those expected to complete quickly. However, carefully analyze your traffic patterns to avoid inadvertently causing failures for specific request types (e.g., large payloads or computationally intensive requests).

Exceptions exist where servers might need to continue processing beyond a deadline. For example, for long-running operations with periodic checkpoints, deadline checks might be more appropriate after checkpointing rather than before computationally expensive steps.

Cancellation Propagation: Terminating Unnecessary Work

Cancellation propagation complements deadline propagation by proactively signaling to servers down the RPC call chain that their work is no longer needed. Some systems use “hedged requests” to mitigate latency, sending the same request to multiple servers and cancelling redundant requests once a response is received. Cancellation propagation ensures that these cancellations cascade down the entire request tree, preventing wasted work across the system.

Cancellation propagation also addresses scenarios where a long initial deadline might mask issues in deeper layers of the stack. If a deeply nested RPC fails with a non-retriable error or a short timeout, deadline propagation alone might not prevent the initial request from continuing to consume resources until its long deadline expires. Propagating fatal errors or timeouts upwards and cancelling related RPCs in the call tree prevents wasted work when the overall request is destined to fail.
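A rough sketch of hedging with cancellation in Go follows. It assumes each replica call honours its context; the `replica` type and the `hedged` and `demo` functions are hypothetical. Real hedging usually delays the second request, whereas this version sends to all replicas at once to stay short.

```go
package main

import (
	"context"
	"errors"
	"fmt"
)

// replica is a hypothetical call to one server that honours cancellation.
type replica func(ctx context.Context) (string, error)

// hedged sends the same request to every replica, returns the first
// successful reply, and cancels the shared context so the others stop.
func hedged(ctx context.Context, replicas []replica) (string, error) {
	ctx, cancel := context.WithCancel(ctx)
	defer cancel() // signals every still-running replica call

	type result struct {
		val string
		err error
	}
	results := make(chan result, len(replicas)) // buffered so losers never block
	for _, r := range replicas {
		go func(r replica) {
			val, err := r(ctx)
			results <- result{val, err}
		}(r)
	}

	lastErr := errors.New("no replicas")
	for range replicas {
		res := <-results
		if res.err == nil {
			return res.val, nil
		}
		lastErr = res.err
	}
	return "", lastErr
}

// demo pairs a fast replica with one that works until it is cancelled.
func demo() (reply string, slowCancelled bool) {
	stopped := make(chan struct{})
	slow := func(ctx context.Context) (string, error) {
		<-ctx.Done() // stands in for expensive work that watches the context
		close(stopped)
		return "", ctx.Err()
	}
	fast := func(ctx context.Context) (string, error) { return "fast", nil }

	reply, _ = hedged(context.Background(), []replica{slow, fast})
	<-stopped // would block forever if the cancellation never arrived
	return reply, true
}

func main() {
	reply, cancelled := demo()
	fmt.Println(reply, cancelled) // prints fast true
}
```

If the slow replica passed the same context to its own backends, the cancellation would continue down the call tree in the same way.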

Bimodal Latency: The Hidden Threat

Consider a frontend service with 10 servers, each with 100 worker threads (1,000 total threads). Normally, it handles 1,000 QPS with 100ms latency, occupying 100 threads (1,000 QPS * 0.1s).

Now, suppose 5% of requests never complete because, for example, the data they need in a backend is unavailable. These requests run until they hit their deadline (say, 100 seconds), while the remaining 95% complete normally in 100ms.

With a 100-second deadline, these 5% of long-latency requests would consume 5,000 threads (50 QPS * 100s). However, the frontend only has 1,000 threads. Assuming no other cascading effects, the frontend can only handle approximately 19.6% of the intended load (1,000 threads / (5,000 + 95) threads’ worth of work), leading to an 80.4% error rate.

Instead of just 5% errors (for the requests hitting the unavailable data), most requests now fail due to thread exhaustion.
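The thread arithmetic in this example follows from multiplying request rate by latency to get the number of busy threads. The sketch below redoes the calculation under the same simplifying assumption of no other cascading effects. `servedFraction` is a hypothetical helper.

```go
package main

import "fmt"

// servedFraction estimates the share of offered load a thread pool can serve
// when a fraction of requests hangs until the deadline.
// Busy threads are estimated as arrival rate multiplied by latency.
func servedFraction(totalThreads, qps, stuckShare, deadlineSec, normalLatencySec float64) float64 {
	stuck := qps * stuckShare * deadlineSec              // threads held by hung requests
	normal := qps * (1 - stuckShare) * normalLatencySec // threads held by healthy requests
	demand := stuck + normal
	if demand <= totalThreads {
		return 1
	}
	return totalThreads / demand
}

func main() {
	// Figures from the text: 1,000 threads, 1,000 QPS, 5% hung for 100 s, 95% at 100 ms.
	f := servedFraction(1000, 1000, 0.05, 100, 0.1)
	fmt.Printf("served: %.1f%%, errors: %.1f%%\n", f*100, (1-f)*100)
	// prints served: 19.6%, errors: 80.4%
}
```

Changing the deadline argument from 100 seconds to 1 second brings demand down to 145 threads, which the pool of 1,000 handles comfortably.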

To address bimodal latency issues:

- Latency Distribution Monitoring: Detecting this problem can be difficult using just average latency metrics. Monitor the distribution of latencies, not just averages, to identify bimodal patterns when latency increases.

- Fail-Fast Mechanisms: Requests that are destined to fail (e.g., backend unavailable) should return errors immediately rather than waiting for the full deadline. Use “fail-fast” options in RPC layers if available.

- Appropriate Deadlines: Avoid setting deadlines that are orders of magnitude longer than typical request latency. In the example above, the 100-second deadline (1000x longer than 100ms latency) exacerbated the thread exhaustion problem.

- Resource Abuse Tracking: When using shared resources susceptible to exhaustion by specific keyspaces or clients, consider limiting in-flight requests per keyspace or implementing abuse tracking. For services serving diverse clients with varying performance profiles, limit the resource share (e.g., threads) any single client can consume to ensure fairness under heavy load.
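As one possible shape for the last item, the sketch below caps in-flight requests per client and rejects the excess immediately, which also illustrates failing fast. `PerClientLimiter` and its parameters are hypothetical; a real service would derive the limit from its thread pool size and client mix.

```python
import threading
from collections import defaultdict


class PerClientLimiter:
    """Caps in-flight requests per client so one client cannot hold every thread."""

    def __init__(self, max_in_flight_per_client: int):
        self._limit = max_in_flight_per_client
        self._in_flight = defaultdict(int)
        self._lock = threading.Lock()

    def try_acquire(self, client_id: str) -> bool:
        """Return False right away when the client is over its share (fail fast)."""
        with self._lock:
            if self._in_flight[client_id] >= self._limit:
                return False
            self._in_flight[client_id] += 1
            return True

    def release(self, client_id: str) -> None:
        """Call when the request finishes, whether it succeeded or failed."""
        with self._lock:
            if self._in_flight[client_id] > 0:
                self._in_flight[client_id] -= 1
```

A handler would call `try_acquire` before doing any work, return an error if it fails, and call `release` in a `finally` block.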

Slow Startup and Cold Caching: Initial Performance Bottlenecks

Processes often exhibit slower performance immediately after startup compared to their steady-state performance. This can be due to:

- Initialization Overhead: Setting up connections to backends or other dependencies upon the first request requiring them.

- Runtime Performance Optimization (Java): Java’s Just-In-Time (JIT) compilation, hotspot optimization, and deferred class loading lead to performance improvements over time as the application “warms up.”

- Cache Warm-up: Services relying heavily on caching are less efficient with cold caches. Cache misses are significantly more expensive than cache hits. In steady-state, cache hit rates are high, but after a restart or in a new cluster, caches are initially empty, leading to 100% cache misses. Some services also keep per-user state in RAM, relying on reverse proxy stickiness to route each user to the frontend instance that holds that state.

If a service isn’t provisioned to handle requests under cold cache conditions, it becomes more vulnerable to outages.
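A rough way to check this is to compare backend capacity against the load it would see with an empty cache. The sketch below is a simplified model that assumes every cache miss becomes exactly one backend request; the function names and the numbers in the usage lines are hypothetical.

```python
def backend_qps(frontend_qps: float, hit_rate: float) -> float:
    """Requests per second that miss the cache and reach the backend."""
    return frontend_qps * (1.0 - hit_rate)


def can_survive_cold_start(frontend_qps: float, backend_capacity_qps: float) -> bool:
    """True if the backend could carry the full load with a 0% hit rate."""
    return backend_qps(frontend_qps, 0.0) <= backend_capacity_qps


# Hypothetical service: 10,000 QPS at the frontend, 95% steady-state hit rate.
print(backend_qps(10_000, 0.95))              # about 500 QPS when warm
print(can_survive_cold_start(10_000, 2_000))  # False: provisioned for warm caches only
```

A backend sized only for the warm-cache load sees a twentyfold increase in this example when the cache is empty, which is the gap that overprovisioning or a gradual ramp has to cover.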

Cold cache scenarios can arise from:

- New Cluster Deployment: Newly deployed clusters have empty caches.

- Maintenance and Cluster Return: Clusters returning to service after maintenance might have stale or empty caches.

- Restarts: A restarted task loses its in-memory cache, and refilling that cache takes time.

To mitigate cold cache issues:

- Overprovisioning: Provision the service with sufficient capacity to handle expected load even with cold caches. Distinguish between latency caches (improving response time but not essential for capacity) and capacity caches (essential for handling expected load). Services relying on capacity caches are particularly vulnerable to cold cache problems. Ensure new caches are either latency caches or robustly engineered capacity caches. Be vigilant when adding caches, as they can inadvertently become hard dependencies.

- Cascading Failure Prevention: Implement general cascading failure prevention strategies like load shedding and graceful degradation. Thoroughly test service behavior after events like large-scale restarts.

- Gradual Load Ramp-up: When adding load to a new cluster or after a restart, gradually increase traffic to allow caches to warm up. Ensure all clusters maintain a nominal load to keep caches warm. Consider using external caching services like memcache to share caches across servers, albeit with the added latency of an RPC call.
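A gradual ramp can be expressed as a simple schedule of traffic caps. The sketch below uses a linear ramp purely for illustration; `ramp_schedule`, the step count, and the step duration are assumptions, and a real rollout would advance only while error rates and cache hit rates look healthy.

```python
def ramp_schedule(target_qps: float, steps: int, step_seconds: float):
    """Return (start_offset_seconds, allowed_qps) pairs for a linear ramp."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [
        (i * step_seconds, target_qps * (i + 1) / steps)
        for i in range(steps)
    ]


# Hypothetical: ramp a new cluster to 1,000 QPS in four one-minute steps.
for offset, qps in ramp_schedule(1000, 4, 60):
    print(f"t+{offset}s: allow up to {qps:.0f} QPS")
```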

Always Go Downward in the Stack: Request Flow Directionality

In a typical layered service architecture (e.g., frontend -> backend -> storage), problems in lower layers (storage) can impact upper layers (backend, frontend). However, resolving issues in the storage layer typically resolves problems in the layers above it.

Intra-layer communication (e.g., backends communicating directly with each other) can introduce complexities and risks. For example, backends might proxy requests to each other to handle user ownership changes if the storage layer is unavailable. This can be problematic due to:

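- Distributed Deadlocks: Intra-layer communication can lead to deadlocks if backends use shared thread pools to wait for RPCs from other backends that are simultaneously sending requests to them. For example, if backend A's thread pool is full, a request from backend B ties up a thread in B until A's pool clears, so thread pool saturation can spread from one backend to the next.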

- Amplified Load Under Failure: Intra-layer communication might increase under failure conditions. For instance, a primary backend might proxy requests to a hot standby secondary backend in another cluster during errors or high load. If the system is already overloaded, this proxying adds further load due to request parsing and waiting for responses from the secondary, exacerbating the overload.

- Bootstrapping Complexity: Intra-layer dependencies can complicate system bootstrapping and startup procedures.

Avoid intra-layer communication in user request paths to prevent cycles and potential cascading issues. Instead of backend-to-backend proxying, have the backend inform the frontend to retry the request with the correct backend instance. Client-driven communication is generally preferable to intra-layer proxying.

Triggering Conditions for Cascading Failures

Several events can initiate cascading failures in susceptible services.

Process Death

Server task failures reduce overall capacity. Tasks can die due to various reasons: “Queries of Death” (malformed requests triggering crashes), cluster-level issues, assertion failures, etc. Even a small number of task failures can trigger a cascade in a service operating near its capacity limit.

Process Updates

Rolling out new binary versions or configuration updates can trigger cascades if a large number of tasks are updated simultaneously. Account for capacity overhead during updates or perform updates during off-peak hours. Dynamically adjust the update rate based on request volume and available capacity.
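One way to adjust the update rate is to compute how many tasks can be out of service at once while the remainder still carries current traffic with some headroom. The sketch below is an assumption-laden illustration: the function name, the 25% default headroom, and the per-task capacity figure are all hypothetical.

```python
import math


def max_tasks_to_update(total_tasks: int, per_task_capacity_qps: float,
                        current_qps: float, headroom_fraction: float = 0.25) -> int:
    """Tasks that may be restarted simultaneously without dropping below need."""
    required_qps = current_qps * (1 + headroom_fraction)
    tasks_needed = math.ceil(required_qps / per_task_capacity_qps)
    return max(0, total_tasks - tasks_needed)


# Hypothetical job: 100 tasks, each able to serve 50 QPS.
print(max_tasks_to_update(100, 50, 1000))  # off-peak: many tasks can update at once
print(max_tasks_to_update(100, 50, 4800))  # near capacity: pause the rollout
```

When the result is zero, the rollout should wait for traffic to fall or for capacity to be added.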

New Rollouts

New binaries, configuration changes, or infrastructure stack changes can alter request profiles, resource usage, backend dependencies, and other system characteristics, potentially triggering cascades. During an outage, check for recent changes and consider reverting them, especially those affecting capacity or request profiles. Implement change logging to quickly identify recent deployments.

Organic Growth

Gradual increases in usage without corresponding capacity adjustments can eventually push a service over its breaking point and trigger a cascade.

Planned Changes, Drains, and Turndowns

Planned maintenance, cluster drains, or turndowns can reduce available capacity. If a service is multi-homed, maintenance or outages in one cluster can impact overall capacity. Drains of critical dependencies can also reduce upstream service capacity or increase latency due to traffic rerouting to distant clusters.

Request Profile Changes

Changes in request patterns can trigger cascades. Backend services might receive requests from different clusters due to load balancing adjustments, traffic shifts, or cluster fullness. The average processing cost per request payload can change due to frontend code or configuration changes. Data handled by the service can also evolve organically (e.g., increased image sizes for a photo storage service).

Resource Limits and Overcommitment

Some cluster operating systems allow resource overcommitment (e.g., CPU). While slack CPU capacity can provide a temporary safety margin, relying on it is risky. Slack CPU availability depends on the behavior of other jobs in the cluster and can fluctuate unpredictably. During load tests, ensure you stay within your committed resource limits, not relying on overcommitted resources.

Testing for Cascading Failures: Proactive Resilience

Predicting service failure modes solely from design principles is difficult. Testing is crucial to identify vulnerabilities to cascading failures.

Test your service under heavy load to build confidence in its resilience.

Test to Failure and Beyond

Understanding service behavior under overload is paramount. Load test components until they break to identify breaking points and failure modes. Ideally, overloaded components should gracefully degrade or serve errors without significantly reducing successful request processing rates. Components prone to cascading failures will crash or exhibit high error rates under overload. Well-designed components should reject requests and remain stable.

Load testing reveals breaking points, essential for capacity planning. It also enables regression testing and allows for trading off utilization versus safety margins.

Because caching can change how a service responds, test both gradually ramped load and sudden impulses of load. Also test how the service recovers after overload:

- Does degraded mode automatically recover?

- How much load reduction is needed to stabilize a system after server crashes?

For stateful or caching services, load tests should track state across interactions and verify correctness under high concurrency, where subtle bugs often manifest.

Test individual components separately to identify their specific breaking points.

Consider production testing in small slices to validate failure behavior under real traffic. Real traffic tests, while riskier, provide more realistic results than synthetic load tests. Be prepared with extra capacity and manual failover options. Production tests can include:

- Gradual or rapid task count reduction beyond expected traffic patterns.

- Simulating loss of a cluster’s capacity.

- Blackholing backend services.

Test Popular Clients

Understand how major clients use your service:

- Do clients queue requests during service downtime?

- Do they use randomized exponential backoff on errors?

- Are they vulnerable to external triggers causing sudden load spikes (e.g., client-side cache invalidation after software updates)?

Understanding client behavior, even for external clients you don’t control, is crucial. Test system failures with your largest clients to observe their reactions. Inquire with internal clients about their access patterns and failure handling mechanisms.
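For reference, a well-behaved client's retry loop might look like the sketch below, which uses randomized ("full jitter") exponential backoff. `call_with_retries` is a hypothetical helper, the base delay, cap, and retry count are arbitrary, and treating `ConnectionError` as the only retriable error is an assumption made for brevity.

```python
import random
import time


def call_with_retries(operation, max_retries=5, base_s=0.1, cap_s=10.0,
                      sleep=time.sleep):
    """Call operation(); on ConnectionError, retry with randomized backoff."""
    for attempt in range(max_retries + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_retries:
                raise  # retry budget exhausted: surface the error
            # The upper bound doubles each attempt; the random draw spreads
            # clients out so they do not retry in synchronized waves.
            upper = min(cap_s, base_s * 2 ** attempt)
            sleep(random.uniform(0.0, upper))
```

The `sleep` parameter exists only so that tests can observe the chosen delays without actually waiting.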

Test Noncritical Backends

Test the impact of noncritical backend failures. Ensure their unavailability doesn’t impact critical service components. Requests involving both critical and noncritical backends can be slowed down or resource-constrained by noncritical backend issues.

Test frontend behavior when noncritical backends are unavailable or unresponsive (e.g., blackholed). Frontends should not exhibit cascading failures, resource exhaustion, or high latency when noncritical backends fail.
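One defensive pattern to exercise in such tests is a short timeout with a fallback value for the noncritical call. The sketch below uses the standard `concurrent.futures` module; `fetch_optional` is a hypothetical helper, and the timeout is arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_optional(pool: ThreadPoolExecutor, call, timeout_s: float, fallback):
    """Run a noncritical call, returning fallback if it is slow or fails."""
    future = pool.submit(call)
    try:
        return future.result(timeout=timeout_s)
    except Exception:  # timeout or backend error: degrade instead of failing
        future.cancel()  # only takes effect if the call has not started yet
        return fallback
```

Note that a call already running against a blackholed backend still occupies a pool thread until it returns, so the backend call itself also needs a deadline. That residual resource usage is exactly what this kind of test should measure.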

Immediate Steps to Address Cascading Failures: Incident Response

When a cascading failure occurs, swift action is required. Utilize your incident management protocol.

Increase Resources

If capacity degradation is the issue and idle resources are available, adding tasks is the quickest recovery method. However, in a “death spiral,” adding resources alone might not be sufficient.

Stop Health Check Failures/Deaths

Cluster schedulers might restart “unhealthy” tasks. In a cascading failure, health checks themselves might contribute to the problem. If overloaded tasks are failing health checks and being restarted, temporarily disabling health checks might allow the system to stabilize until all tasks are running.

Distinguish between process health checks (is the binary running?) and service health checks (can the service handle requests?). Process health checks are for schedulers, service health checks for load balancers. Clear separation prevents health checking from exacerbating the cascade.
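The separation can be sketched as two distinct checks with different consumers. The names and the fields of `ServerState` below are hypothetical; the point is only that an overloaded task can fail the service check (so it stops receiving traffic) without failing the process check (so it is not restarted).

```python
from dataclasses import dataclass


@dataclass
class ServerState:
    in_flight: int
    max_in_flight: int
    backend_reachable: bool


def process_healthy(state: ServerState) -> bool:
    """For the scheduler: being able to answer at all means the binary is running."""
    return True


def service_healthy(state: ServerState) -> bool:
    """For the load balancer: can this task usefully take more requests right now?"""
    return state.backend_reachable and state.in_flight < state.max_in_flight


overloaded = ServerState(in_flight=100, max_in_flight=100, backend_reachable=True)
print(process_healthy(overloaded), service_healthy(overloaded))  # True False
```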

Restart Servers

Restarting servers can be helpful if servers are “wedged” or unresponsive:

- Java servers in GC death spirals.

- In-flight requests without deadlines consuming resources.

- Server deadlocks.

However, identify the root cause before restarting, because a restart might just shift the load around. Canary the restart on a few servers first and proceed slowly. Be aware that restarts can worsen an outage caused by cold caches.

Drop Traffic: The Last Resort

Dropping load is a drastic measure for severe cascading failures. If heavy load causes servers to crash as soon as they become healthy, recovery steps include:

- Address the initial trigger (e.g., add capacity).

- Aggressively reduce load (e.g., allow only 1% traffic) until crashing stops.

- Allow servers to become healthy.

- Gradually ramp up traffic.

This allows caches to warm up and connections to be established before full load returns. The tactic causes significant user-visible harm, and whether you can (or should) drop traffic indiscriminately depends on how the service is configured. If you have a mechanism for dropping less critical traffic (e.g., prefetching), use it first. Keep in mind that dropping traffic only helps you recover once the underlying problem is fixed. If the root cause remains (e.g., insufficient capacity), the cascade will likely recur as soon as full traffic returns, so address it first: for example, if the service ran out of memory and entered a death spiral, add memory or tasks before ramping traffic back up.
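Dropping less critical traffic first can be modeled as filling the available capacity in priority order. The sketch below is illustrative only: the criticality classes, their ordering, and the `shed_plan` helper are hypothetical, and real services usually tag criticality on each request.

```python
# Hypothetical criticality classes; a lower number means more important.
PRIORITY = {"interactive": 0, "batch": 1, "prefetch": 2}


def shed_plan(demand_qps_by_class: dict, capacity_qps: float) -> dict:
    """Return the fraction of each class to admit, most important class first."""
    remaining = capacity_qps
    plan = {}
    for cls in sorted(demand_qps_by_class, key=PRIORITY.__getitem__):
        demand = demand_qps_by_class[cls]
        admitted = min(demand, max(remaining, 0))
        plan[cls] = admitted / demand if demand else 1.0
        remaining -= admitted
    return plan


demand = {"prefetch": 300, "interactive": 800, "batch": 100}
print(shed_plan(demand, 850))
# {'interactive': 1.0, 'batch': 0.5, 'prefetch': 0.0}
```

During recovery, `capacity_qps` would start very low (for example, 1% of normal) and be raised step by step as servers stay healthy.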

Enter Degraded Modes

Activate graceful degradation: serve lower-quality results or drop less important traffic. This requires pre-engineered service capabilities to differentiate traffic types and degrade gracefully.

Eliminate Batch Load

Disable non-critical batch processing tasks (index updates, data copies, statistics gathering) that consume serving path resources during an outage.

Eliminate Bad Traffic

Block or filter “bad traffic” (e.g., “Queries of Death” causing crashes).

Cascading Failure and Shakespeare Service Example

A Shakespeare documentary in Japan drives unexpected traffic to the Shakespeare service’s Asian datacenter, exceeding capacity. A simultaneous major service update in that datacenter compounds the issue.

Safeguards are in place: Production Readiness Reviews identified and addressed potential issues. Graceful degradation is implemented – the service stops returning images and maps to conserve resources under load. RPC timeouts and randomized exponential backoff retries are used. Despite this, tasks fail and restart, reducing capacity further.

Service dashboards trigger alerts, and SREs are paged. SREs temporarily add capacity to the Asian datacenter, restoring the service.

Postmortem analysis identifies lessons learned and two action items to prevent recurrence: using GSLB load balancing to redirect traffic to other datacenters during overload, and enabling autoscaling so that task counts adjust automatically with traffic.

Final Thoughts

When systems are overloaded, controlled degradation is preferable to catastrophic failure. Allowing some user-visible errors or reduced quality is better than complete service outage. Understanding breaking points and system behavior under overload is crucial for service owners.

Changes intended to improve steady-state performance (retries, load shifting, server restarts, caching) can inadvertently increase cascading failure risks. Evaluate changes carefully to avoid trading one type of outage for another. Be vigilant in testing and proactive in implementing robust failure prevention and mitigation strategies.